You've sat through weeks of AI demos and case studies promising 10x gains.

But when you try to build a real implementation plan, nothing feels concrete.

You're left wondering:

- Which processes should you automate first?

- Where should you start without overwhelming your team?

- Should you build custom solutions or buy off-the-shelf?

- How do you maintain quality control at scale?

For the State of AI Adoption report, we talked to 100+ B2B SaaS marketing and sales leaders through Wynter to understand what works in practice. What we found reveals a more nuanced reality: AI works best as a strategic tool that requires careful implementation and human oversight.

This article breaks down what we learned from real implementations across those 100+ B2B teams. You'll see where AI helps right away, where you need to keep humans involved, and how successful teams make these calls.

1. Prioritize people to secure team buy-in

Most AI failures happen in the gap between purchase and adoption. The tool works fine, but your team refuses to use it.

They resist the change, find ways around the new workflow, or use it just enough to satisfy management. The software works, but the adoption strategy doesn't.

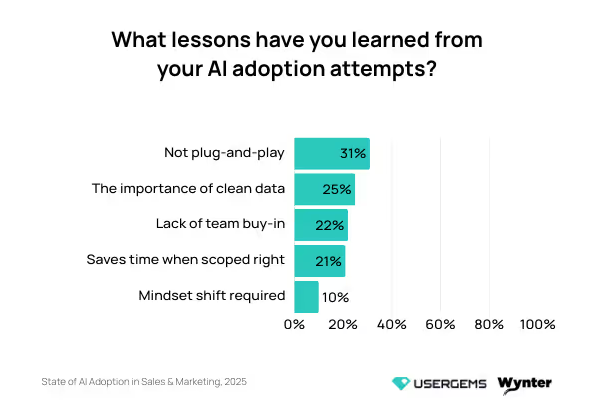

Our research confirms this. Nearly a third of respondents mentioned team resistance as their main problem when it comes to AI adoption.

This aligns with broader industry findings. McKinsey research shows that only 1% of executives describe their AI rollouts as “mature”.

Your team's concerns are legitimate. People want AI to act as an assistant that enhances their capabilities. The biggest barriers are the emotional responses, from job security fears to loss of creative control and brand voice.

These are predictable responses to change that require direct attention.

Here’s what one Director of Demand Generation (1001-5000 employees, SaaS/software) had to say about this in our research:

"AI can be super intimidating for team members to adopt. They know they need it, but they are worried it will replace them if they prove how effective it is. This has been the biggest hurdle."

Teams need time to learn the tools, space to figure out what works for them, and proof that AI will help (not hurt) their careers. Position it as support that enhances their work and advances their careers.

The practical steps you can take look like this:

- Start with transparency: Explain what you're trying to solve and why AI makes sense for those specific problems. Be honest about what might change and what won't. Present realistic benefits and acknowledge the learning curve upfront.

- Involve your team early: Let them test tools during evaluation and ask what would make their jobs easier. People support what they help create.

- Pick your pilot team carefully: Choose people who are open to change but also respected by their peers. You need credible voices who can share firsthand experiences.

- Create feedback loops: Check in regularly during rollout. What's working? What's frustrating? What needs to change? Show your team you'll adjust based on their input.

- Celebrate early wins publicly: When someone finds a workflow that saves time or improves results, share it. Let your team see concrete benefits from their peers.

Real buy-in means voluntary adoption—people use the tools because they see value. Forced compliance leads to workarounds and minimal engagement.

Key takeaway: Technology problems are easier to solve than people problems. Team resistance comes from legitimate fears about relevance, autonomy, and quality control. Present AI as something that handles grunt work so your team can do more of what they're good at.

2. Treat AI as a strategic initiative, not a plug-and-play solution

Most AI vendors promise instant productivity gains. UserGems takes a different approach: we integrate with your existing stack, provide transparent scoring you can verify, and measure success by pipeline and revenue, not vanity metrics.

AI systems learn from your data and adapt to how you work. That learning process takes time. You can't skip the training phase and expect good output.

As a Demand Generation Director (501-1000 employees, SaaS/software) from the survey put it:

"The setup and training of an AI tool is key. It takes time to train a system to your company's needs and business goals. You have to be invested for the long term and not expect results overnight. The challenge is always stakeholder education and getting buy-in."

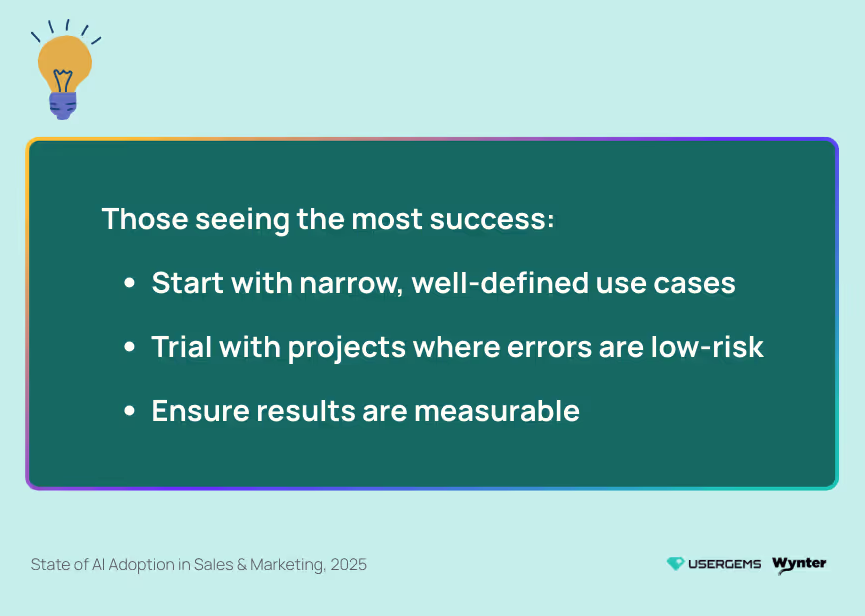

What works for most teams comes down to this:

- Pick one specific use case to start: Find a single business process where AI can help and prove it works there first. Then expand the AI applications to other areas.

- Test in low-stakes environments: Run AI on tasks where mistakes won't hurt customer relationships or break critical processes. Figure out what the tool handles well and where it falls short before you use it for anything important.

- Set clear success metrics and KPIs upfront: Decide what improvement looks like before you start. Track whether AI moves those baseline numbers. Specific business objectives let you measure whether the tool delivers value for your investment.

- Build in human review checkpoints: Require human review of all AI output before it reaches customers or enters production. Catch mistakes early while the stakes are still low.
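
The success-metrics step above can be made concrete with a simple before-and-after comparison. The sketch below is illustrative only: the metric names, baseline numbers, and targets are hypothetical and not taken from the survey. It assumes you record a baseline before the pilot and agree on a target relative change for each metric.

```python
# Hypothetical numbers for illustration; replace with your own baseline and pilot data.
baseline = {"reply_rate": 0.040, "hours_per_week_on_research": 10.0}
pilot = {"reply_rate": 0.042, "hours_per_week_on_research": 7.0}

# Target relative change agreed on before the pilot starts.
# Positive means "should go up by at least this much", negative means "should go down".
targets = {"reply_rate": 0.10, "hours_per_week_on_research": -0.25}


def relative_change(before, after):
    """Change relative to the baseline, e.g. 0.05 means a 5% increase."""
    return (after - before) / before


def target_met(target, change):
    """Check the change against a target in the direction the target points."""
    return change >= target if target >= 0 else change <= target


results = {
    name: target_met(targets[name], relative_change(baseline[name], pilot[name]))
    for name in targets
}

for name, ok in results.items():
    change = relative_change(baseline[name], pilot[name])
    print(f"{name}: {change:+.0%} (target {targets[name]:+.0%}) -> {'met' if ok else 'not met'}")
```

In this made-up pilot, research time drops enough to meet its target while reply rate does not, which is the kind of mixed result that tells you where the tool still needs tuning.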

Team leaders should expect a learning curve. AI needs ongoing coaching, oversight, and room to improve—just like any new team member.

One Head of Digital Marketing (501-1000 employees, SaaS/software) we talked to learned this lesson the hard way:

"Each AI tool I've run into has a 'training' period that takes longer than expected to get up and running. I've learned that people tend to underestimate how long to get things working fully under AI, so you don't provide bad customer experiences."

Key takeaway: AI isn't plug-and-play. It needs training, testing, and time to learn how your team works. Treat it like onboarding a new hire - set clear roadmap goals, start small, and give it room to improve before you expect real-world results.

3. Master your inputs with clean data and effective prompts

AI quality depends entirely on data quality. If your CRM is full of duplicate records, incomplete fields, and outdated information, AI will only multiply those problems.

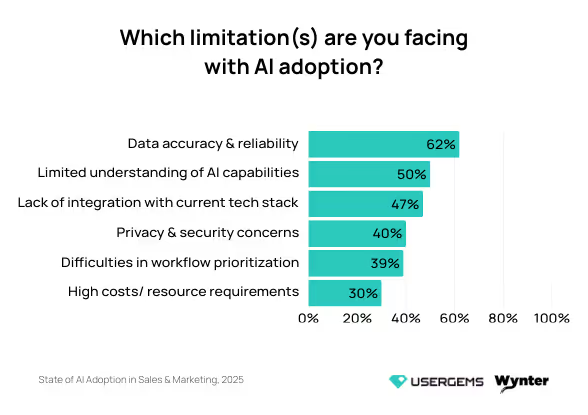

When we asked leaders what holds back AI adoption, 62% said that they don't trust their data.

A Senior Director of Revenue Operations (1001-5000 employees, SaaS/software) we talked to explained it clearly:

"Data quality is important for AI to work properly. CRM data, including validations and flows, needs to be structured for AI to deliver continuous improvements in productivity."

Fix data problems before implementing AI. Issues that seem manageable at small scale become systemic when AI amplifies them across your workflows.

Some of the things you can do:

- Audit your CRM for duplicates, incomplete records, and stale information

- Standardize how you format dates, phone numbers, and company names

- Set up validation rules that block bad data from entering your system

- Put someone in charge of data quality as an ongoing responsibility
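
As a rough illustration of the audit and standardization steps above, here is a minimal Python sketch. The record layout, field names, and the one-year staleness threshold are assumptions made for the example, not features of any particular CRM. A real audit would run against your CRM's export or API.

```python
import re
from collections import Counter
from datetime import date

# Hypothetical CRM export; field names and values are illustrative only.
records = [
    {"email": "Ana@Example.com ", "phone": "(415) 555-0100", "company": "Acme Inc.", "last_updated": date(2025, 1, 10)},
    {"email": "ana@example.com", "phone": "415.555.0100", "company": "ACME", "last_updated": date(2022, 3, 2)},
    {"email": "", "phone": "555-0199", "company": "Globex", "last_updated": date(2024, 11, 5)},
]

REQUIRED_FIELDS = ("email", "phone", "company")


def normalize_email(value):
    """Trim whitespace and lowercase so the same address always compares equal."""
    return value.strip().lower()


def normalize_phone(value):
    """Keep digits only so differently formatted numbers compare equal."""
    return re.sub(r"\D", "", value)


def audit(rows, today, stale_after_days=365):
    """Return row indexes that look duplicated, incomplete, or stale."""
    email_counts = Counter(
        normalize_email(r["email"]) for r in rows if r["email"].strip()
    )
    report = {"duplicates": [], "incomplete": [], "stale": []}
    for i, r in enumerate(rows):
        email = normalize_email(r["email"])
        if email and email_counts[email] > 1:
            report["duplicates"].append(i)
        if any(not str(r.get(field, "")).strip() for field in REQUIRED_FIELDS):
            report["incomplete"].append(i)
        if (today - r["last_updated"]).days > stale_after_days:
            report["stale"].append(i)
    return report


report = audit(records, today=date(2025, 6, 1))
print(report)
```

Matching on a normalized email is only one possible duplicate rule. Company names such as "Acme Inc." and "ACME" would need their own normalization, which this sketch does not attempt.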

For signal-based selling to work, you need accurate contact data and transparent scoring that shows why a buyer is flagged as ready. Generic enrichment tools don't provide the signal context that revenue teams need to act with confidence.

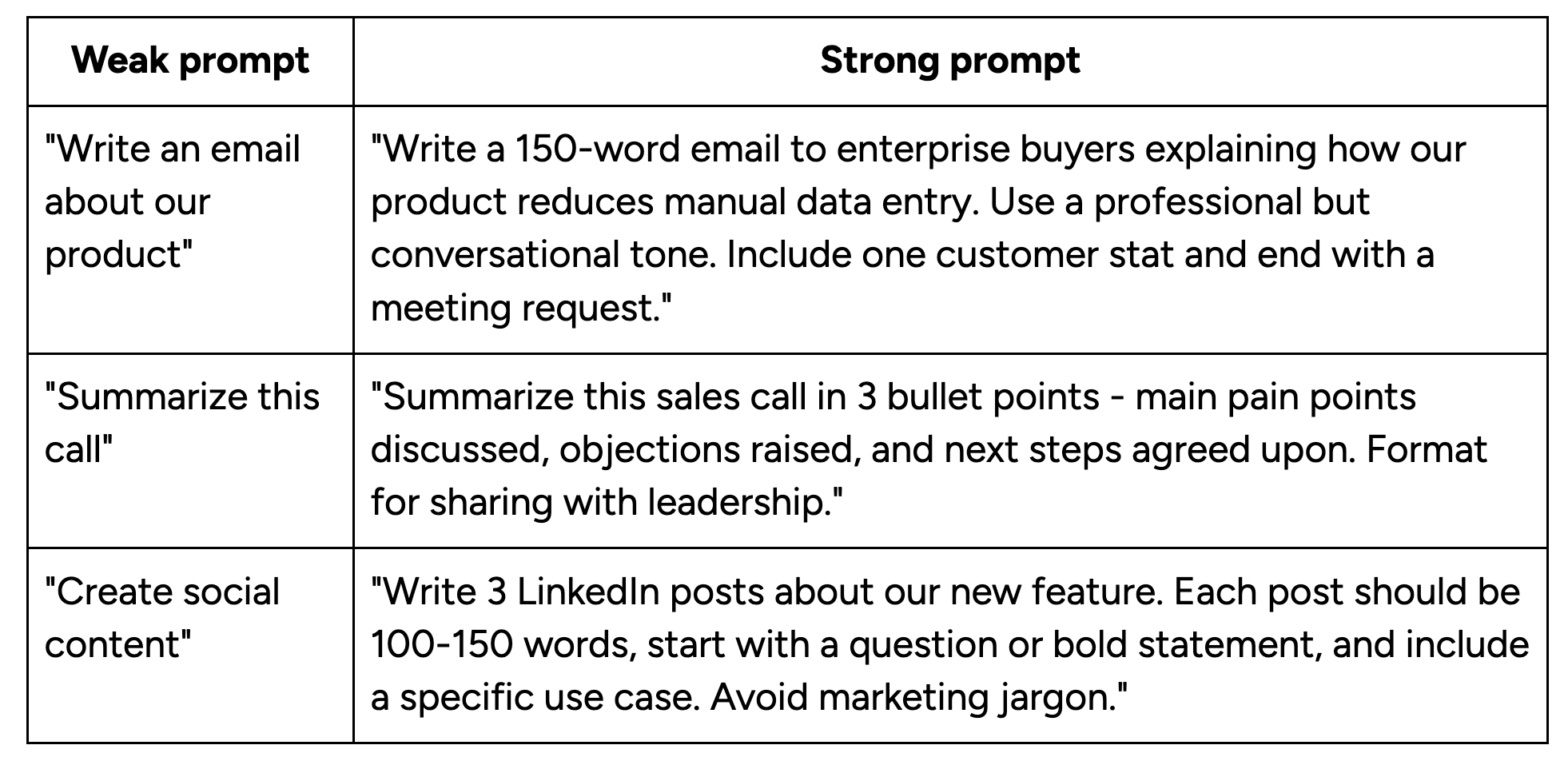

Even with perfect data, AI initiatives need clear instructions. Specific prompts that include context and constraints get you outputs that match your standards.

One Demand Generation Director (1001-5000 employees, SaaS/software) shared what they learned:

"I've learned that good prompts can mean all the difference when it comes to getting a desired outcome with AI tools. So, learning how to write good prompts helps with the efficiency of using the tools."

The difference between weak and effective prompts comes down to specificity. Strong prompts specify the format, length, tone, audience, and purpose. They give examples of what to include and what to avoid. They provide enough context for the AI to match your standards.
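
One way to make those elements hard to forget is to build prompts from a small template. The sketch below is a hypothetical helper, not part of any AI tool's API: the function name, parameters, and example values are all assumptions chosen to mirror the elements listed above.

```python
def build_prompt(task, audience, tone, output_format, max_words, include=(), avoid=()):
    """Assemble a prompt that states purpose, audience, tone, format, and length."""
    lines = [
        f"Task: {task}",
        f"Audience: {audience}",
        f"Tone: {tone}",
        f"Format: {output_format}",
        f"Length: at most {max_words} words",
    ]
    # Optional guidance on what to include and what to leave out.
    if include:
        lines.append("Include: " + "; ".join(include))
    if avoid:
        lines.append("Avoid: " + "; ".join(avoid))
    return "\n".join(lines)


# Illustrative values only.
prompt = build_prompt(
    task="Write a follow-up email after a product demo",
    audience="VP of Sales at a mid-market SaaS company",
    tone="Direct and friendly, no hype",
    output_format="Plain-text email with a subject line",
    max_words=120,
    include=["one specific point from the demo", "a single clear next step"],
    avoid=["buzzwords", "more than one call to action"],
)
print(prompt)
```

Compare the result with a bare request such as "write a follow-up email": the template forces you to state the audience, constraints, and exclusions that a weak prompt leaves to chance.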

Key takeaway: Your AI outputs are only as good as your inputs. Clean up your CRM first by fixing duplicates and standardizing data formats, then learn to write clear, specific prompts that tell the system exactly what you need.

💡 PRO TIP: UserGems handles data quality with proprietary verification that delivers 95% accuracy and keeps email bounces under 5%. Clean signals feed your AI from the start, which means your team can trust the tool's recommendations without spending hours validating data themselves.

4. Invest in team training and dedicated learning time

Teams buy AI tools and expect people to figure them out. Without proper training, your team uses only a fraction of what the tool can do.

Nearly one in five teams (17%) are flying blind, saying they don't know the full capabilities of their AI tools.

This mistake is common. Leadership buys the tools and announces the rollout. Then they expect employees to figure it out while keeping up with their regular work. Most people need dedicated time to explore the tool's capabilities and move beyond surface-level usage.

A Director of Business Development (201-500 employees, SaaS/software) from our survey explained what works better:

Teams need time to develop new skills, adjust their processes, and figure out how AI fits their specific work. That's why good training programs include:

- What the tool does and where it fits in their daily workflow

- Common mistakes to avoid and how to troubleshoot when things break

- Practice runs with actual scenarios they'll encounter in their work

- Concrete examples from coworkers who've handled similar situations

- Time to test things without worrying about mistakes or productivity drops

Most organizations rush deployment instead of investing in competence. Assign someone to support the rollout, block time for practice, and treat skill development as part of the implementation budget.

Key takeaway: Block time for training and practice instead of expecting people to figure it out between meetings. The faster you want adoption, the more learning time you need to budget upfront.

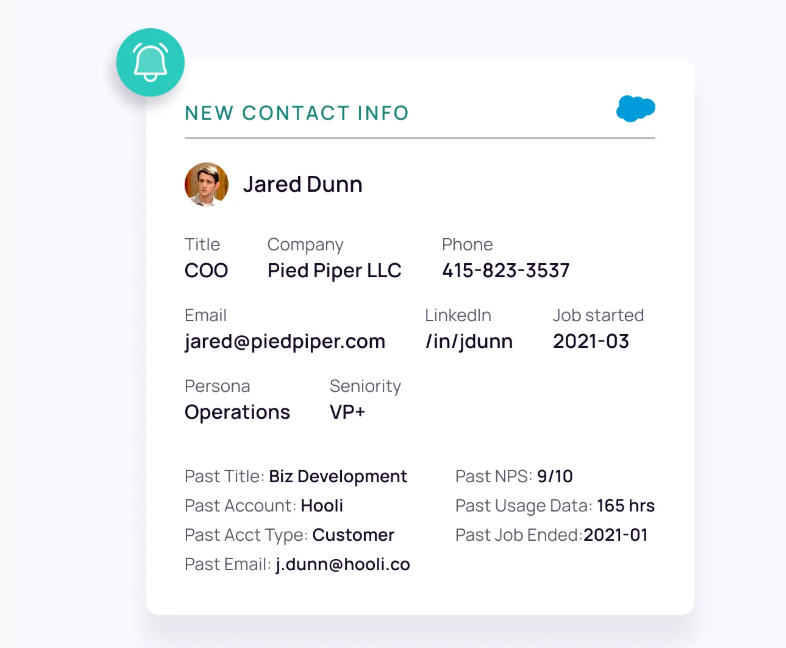

5. Prioritize integration with your existing tech stack

Most AI implementations automate one task but create three new manual ones. The AI generates outputs, but someone still has to move those insights into the systems your team already uses.

This Senior Marketing Manager (501-1000 employees, SaaS/software) from our survey went into more detail on this problem:

"One of the biggest gaps we've seen is integration. Many AI tools don't connect well with our existing tech stack, making it hard to embed them into real workflows. We also struggle with the lack of AI-ready flexibility. Most solutions don't adapt to how marketing teams operate day to day."

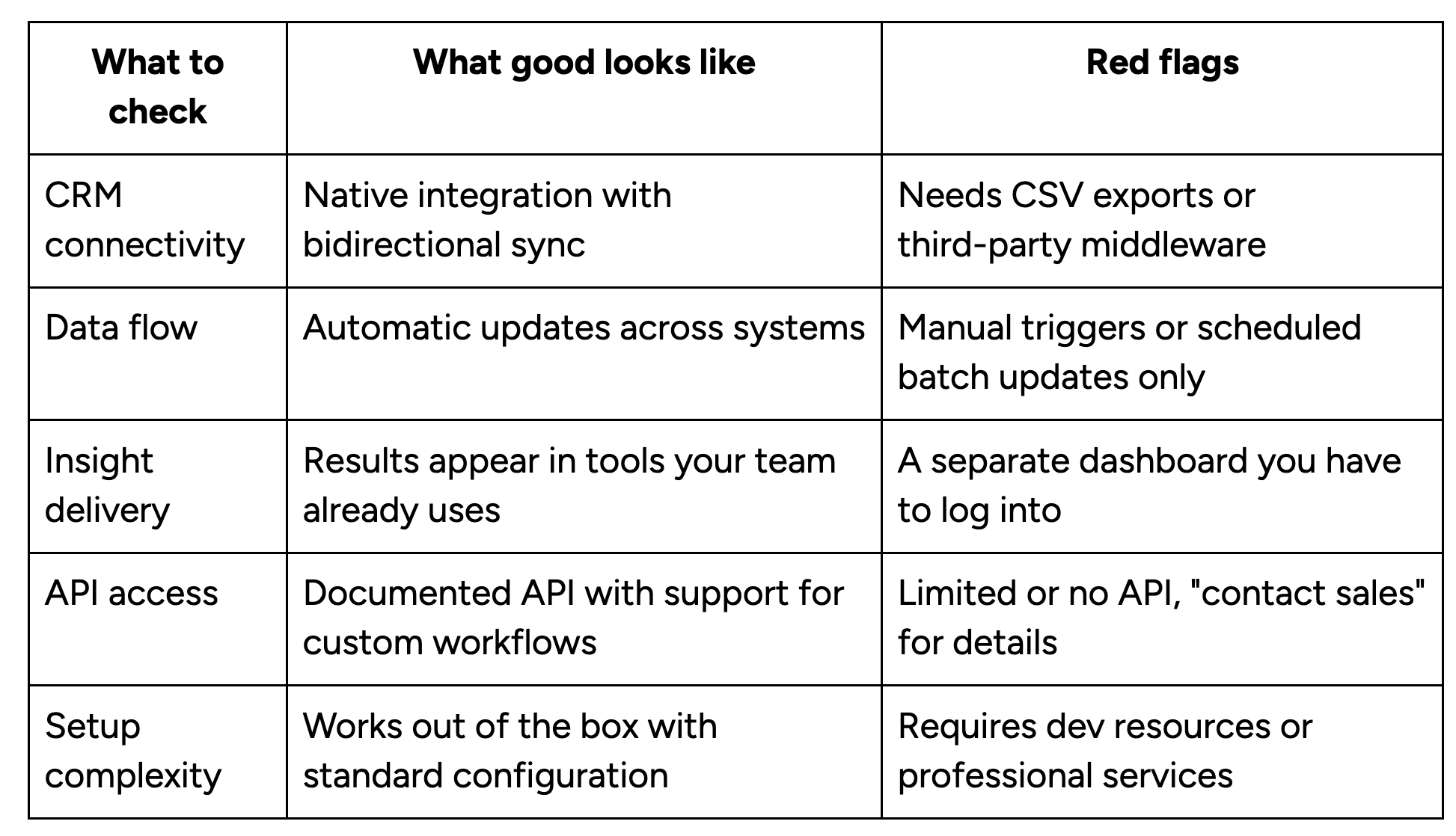

Before you commit to any AI tool, verify how well it connects to your existing systems. Here's what to evaluate specifically:

Document your existing workflow and data flows before vendor conversations start. Then, in demos, ask vendors to walk through integration with your tech stack.

Most teams overestimate their tolerance for manual workarounds. What feels manageable in week one creates significant friction by month three.

Key takeaway: Check for native integrations with your tech stack before you buy, not after. If the vendor says you need CSV exports or custom development work, keep looking.

💡 PRO TIP: UserGems integrates natively with Salesforce, HubSpot, Outreach, and Salesloft to meet your team where they already work. Buying signals, tasks, and AI-generated messages appear directly in your existing tools, so reps never need to switch platforms or manually transfer data between systems.

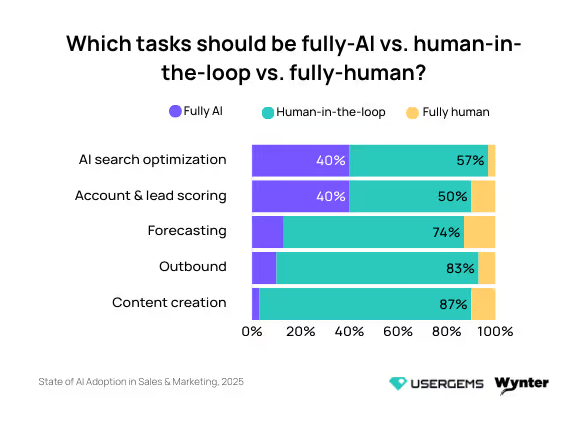

6. Implement human-in-the-loop workflows for critical tasks

We asked teams where they feel comfortable letting AI work independently versus where they want human oversight.

Analytical tasks like search optimization and lead scoring see split decisions, with about 40% comfortable letting AI work alone.

High-stakes work tells a different story. 84% of teams keep humans in control of outbound messages. Content creation shows an even stronger preference for human oversight.

Teams don't yet trust AI for high-stakes work. As one Global Director of Sales Enablement (501-1000 employees, SaaS/software) put it:

"It's very early days. The trust I think we will have in AI to be more autonomous is coming, but it's not fully here yet."

This trust gap is consistent across revenue teams. Redditors in other industries share the same hesitation:

The best approach to building human-in-the-loop workflows is to match your oversight level to what's at stake. Ask what happens if AI gets it wrong, then design your review process around that answer:

- Categorize by consequence: What happens if the AI gets it wrong? If the answer is "minor inconvenience," you can automate more freely. If the answer is "damaged customer relationship," you should keep humans in control.

- Match review intensity to risk: High-stakes tasks need individual review of every output. Medium-risk work can use sample checks. Lower-risk processes need only periodic audits to find recurring problems.

- Set clear approval thresholds: Define when AI acts independently and when it needs sign-off. AI might auto-score low-value leads but flag enterprise accounts for manual review.

- Design for efficiency: Review processes that create bottlenecks defeat the purpose of AI. Build review into workflows people already use and flag only the outputs that need human judgment.
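
The rules above can be written down as an explicit review policy. The following is a minimal sketch under stated assumptions: the risk categories, task fields (`customer_facing`, `account_tier`, `affects_pipeline`), and the 20% sample rate are hypothetical examples, not recommendations from the survey.

```python
import random
from enum import Enum


class Risk(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


# Hypothetical policy: share of outputs a human reviews before release.
# HIGH is reviewed every time, MEDIUM is sample-checked, and LOW is not
# reviewed inline (it relies on periodic audits done separately).
REVIEW_RATE = {Risk.HIGH: 1.0, Risk.MEDIUM: 0.2, Risk.LOW: 0.0}


def classify(task):
    """Assign a risk level from the consequence of the AI getting it wrong."""
    if task["customer_facing"] or task["account_tier"] == "enterprise":
        return Risk.HIGH
    if task["affects_pipeline"]:
        return Risk.MEDIUM
    return Risk.LOW


def needs_review(task, rng=random.random):
    """Decide whether this output goes to a human before it is used."""
    return rng() < REVIEW_RATE[classify(task)]


# Illustrative tasks only.
outbound_email = {"customer_facing": True, "account_tier": "smb", "affects_pipeline": True}
smb_lead_score = {"customer_facing": False, "account_tier": "smb", "affects_pipeline": True}
internal_tagging = {"customer_facing": False, "account_tier": "smb", "affects_pipeline": False}

print(classify(outbound_email), needs_review(outbound_email))
print(classify(smb_lead_score), needs_review(smb_lead_score))
print(classify(internal_tagging), needs_review(internal_tagging))
```

Keeping the policy in one table makes it easy to tighten or loosen review rates as error rates and team confidence change.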

Watch for signs you've automated too much. If error rates climb, customer complaints increase, or your team loses confidence in AI outputs, pull back. You can always expand automation later as tools improve and trust builds.

Key takeaway: Teams trust AI for analysis but not for customer-facing work. Build review checkpoints based on what happens if the AI screws up. Low-stakes tasks can run automatically, but anything that touches customers or affects relationships needs human approval before it goes out.

7. Designate and protect 'human-only' zones

Some tasks require human judgment and creativity, even when AI can technically handle them. In our survey, we found specific types of work where leaders refuse to hand control to machines.

Four types of work consistently stayed off-limits:

- Creative work and brand voice: 29%

- Customer and buyer communication: 21%

- Relationship building: 13%

- Strategic thinking and decision making: 12%

For example, creative work needs the originality and judgment that AI hasn't mastered yet.

One Senior Marketing Manager (10,000 employees, SaaS/software) testing AI for campaigns found the output bland and recycled:

"Right now, we are seeing an explosion of AI-driven content, which is bland and regurgitated. AI doesn't create original insights, it just rewords existing content."

Customer communication has subtler problems. AI produces technically accurate messages, but it can’t read emotional cues or grasp context that matters in relationship building. When prospects ask detailed questions, they want human expertise, not algorithmic responses.

One Director of Mid Market Sales (201-500 employees, SaaS/software) explained the concern:

"When a client reaches out for a detailed and nuanced request that only a human should respond with a level of expertise necessary to show an understanding of the client's request, if the client could just use AI on their own, why come to us?"

Finding your own boundaries means asking the right questions about each task. You can start with these steps:

- List tasks where authenticity and emotional intelligence matter more than speed or scale. Ask your team where AI outputs feel wrong or inadequate. Those friction points usually point to work that needs human control.

- Test the automation question with consequences. If AI handles this task and does it poorly, what breaks? If the answer involves damaged relationships, weakened brand perception, or bad strategic bets, keep humans in charge.

- Look for work where the "how" matters as much as the "what." Creating a campaign brief involves specific information, but how you frame priorities and trade-offs shapes the entire project. Writing an email includes words and structure, but tone and timing determine whether it builds or burns a relationship.

- Make the zones explicit in your AI implementation plans. Document which tasks stay human-controlled and why. When pressure builds to automate more, you need clear reasoning to push back.

Track what automation costs you, not just what it saves. Pay attention to how automation affects relationships, brand perception, and decision quality.

Use AI where it multiplies value. Keep humans in charge where judgment, creativity, or connection matter more than speed or scale.

Key takeaway: Some work should stay human no matter how good AI gets. Creative strategy, customer relationships, and nuanced communication need judgment and emotional intelligence that AI can't replicate. Define these boundaries early so you don't automate yourself into bland content and damaged relationships.

8. Deploy AI on repetitive, data-intensive tasks first

Leaders identified the tasks they most want AI to handle. The answers mainly clustered around work that's repetitive, time-intensive, and doesn't need human creativity.

Their priorities fell into four categories:

- Routine task automation: Leaders want AI to handle workflows from start to finish, including lead assignment, seller notifications, follow-ups, and marketing tasks when leads progress.

- Content and communication support: Teams need help creating prospecting outreach and getting guidance on communication strategy.

- Pipeline generation and lead scoring: AI should identify accounts with strong buying intent, score leads accurately, and segment them into more useful lists.

- Lead generation and enrichment: Tools that find potential customers, enrich contact data, research markets, and compile relevant intelligence.

One Senior Director of Enterprise Marketing (10,000 employees, SaaS/software) described the time drain clearly:

"I'd love to see an AI that automated my leads workflow from start to finish. So once a lead comes in, assign the lead to a seller, notify the seller, and notify marketing when the leads have progressed and are closed."

A Senior Director of Demand Generation (201-500 employees, SaaS/software) pointed to the low-value work that consumes disproportionate time:

"Handle all the tasks that take time but don't provide much value—uploading event lists, doing account research, market research."

These tasks work well for automation and AI usage because they share key traits:

- Consistent steps and processes each time

- Heavy on data work, light on creative input

- Time-intensive but strategically straightforward

- Prone to human error and data privacy issues when done in high volume

To find the right tasks to automate, you’ll need a strategic approach. Here’s one framework you can use to evaluate your options:

Pick one task with clear, measurable impact and manageable complexity for your first automation. Demonstrate it works, build confidence, and then expand from there. A win with lead scoring opens doors for harder projects later.
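
One way to make that first pick less subjective is to score candidate tasks against the traits listed earlier in this section. The sketch below is a hypothetical scoring scheme, not the framework from the report: the 1-5 ratings, the formula, and the example tasks are all assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    consistency: int        # 1-5: does it follow the same steps every time?
    data_heavy: int         # 1-5: how much of the work is data handling?
    creative_judgment: int  # 1-5: how much human creativity does it need?
    hours_per_week: float   # team time currently spent on it


def automation_score(task):
    """Higher is better: repetitive, data-heavy, time-consuming, low on judgment."""
    fit = task.consistency + task.data_heavy - task.creative_judgment
    return fit * task.hours_per_week


# Illustrative ratings only; your team should supply its own.
candidates = [
    Task("Campaign brief", consistency=2, data_heavy=2, creative_judgment=5, hours_per_week=4),
    Task("Lead assignment", consistency=5, data_heavy=4, creative_judgment=1, hours_per_week=6),
    Task("Event list uploads", consistency=5, data_heavy=5, creative_judgment=1, hours_per_week=3),
]

ranked = sorted(candidates, key=automation_score, reverse=True)
for task in ranked:
    print(f"{task.name}: {automation_score(task):g}")
```

With these made-up ratings, lead assignment ranks first and the campaign brief scores negative, which matches the intuition that creative work is a poor first automation target.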

Key takeaway: Teams want AI to handle work that's tedious and time-consuming but strategically simple. Look for tasks your team does daily that follow the same steps every time, like lead assignment and follow-ups. A small win on high-frequency work builds more confidence than a big swing at something complicated.

💡 PRO TIP: UserGems handles the tedious work your team dreads. Gem-E finds high-potential prospects, fills in their contact details, scores them based on signals, and drafts relevant outreach. Your reps skip straight to conversations instead of spending hours on manual prep work.

9. Focus on solving business problems, not just adopting AI technology

About a third of leaders (33%) in our survey prioritize solving business problems over adopting specific technologies. They deploy AI where it makes sense, but stay open to conventional solutions when those perform better.

This problem-first approach matters because the alternative creates dysfunction. Redditors in enterprise IT roles describe watching dedicated AI teams fail to deliver on practical use cases:

One Head of Digital Marketing (501-1000 employees, SaaS/software) described how they evaluate tools:

"I think I wouldn't shy away from a proven tool that isn't using AI if it's fulfilling the needs I have. I definitely am tasked with finding artificial intelligence tools to integrate into my workflows, but that's not saying I'm avoiding non-AI products.

It's the foundation of 'I just need to get my job done, what is going to help me do that?' It doesn't matter to me if it's AI, not AI, or a hamster running on a wheel. If I'm getting the job done how I need it to be, then I'll go for it."

The thinking runs backward from most implementations. Instead of asking "where can we use AI?" these teams ask "what problems need solving?" Then, they evaluate whether AI offers the best solution compared to alternatives.

Define what success looks like before you evaluate any AI solutions.

- What improves when you fix this problem?

- Which metrics change?

- What obstacles disappear?

Clarity on outcomes stops you from shopping for new technology first.

Map the problem to see if AI fits. Some problems need automation, while others need better processes, clearer communication, or extra headcount.

Compare AI to simpler options. Choose the non-AI tool if it costs less, integrates more easily, or runs more reliably. If you struggle to articulate what improves when you implement a tool, you're probably technology-shopping instead of problem-solving.

Key takeaway: The best approach works backward from the problem instead of forward from the technology. Ask what needs fixing first, then decide if AI is the right answer compared to better processes or non-AI tools. Sometimes a simpler solution costs less and works more reliably.

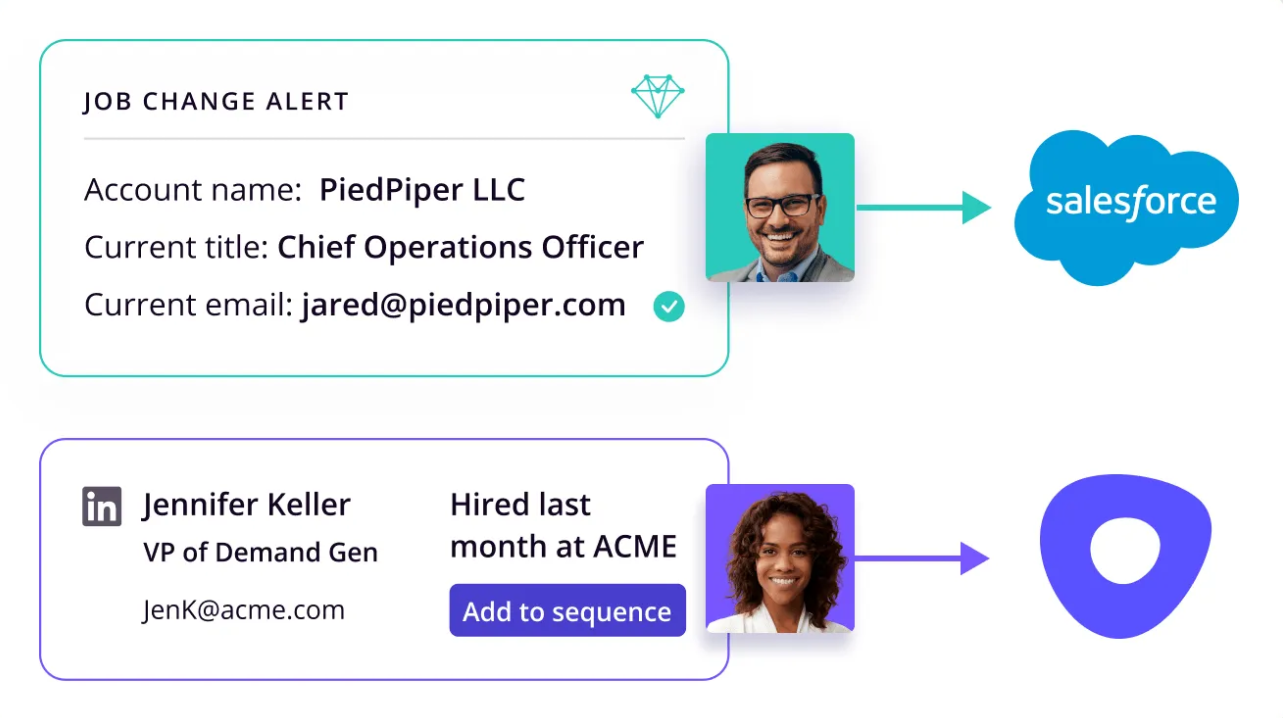

Adopt AI the smart way with UserGems

Based on our research, most teams find AI adoption messier than they anticipated. You need reliable data, specific problems to solve, and tools that support your team’s specific workflows.

UserGems builds these practices into the platform, so you can implement proven AI workflows without months of trial and error.

UserGems is an AI outbound platform that uses proprietary buying signals and an AI agent called Gem-E to find your highest-potential prospects, rank them by how likely they are to convert, and create personalized messages for email, calls, and LinkedIn outreach.

UserGems provides:

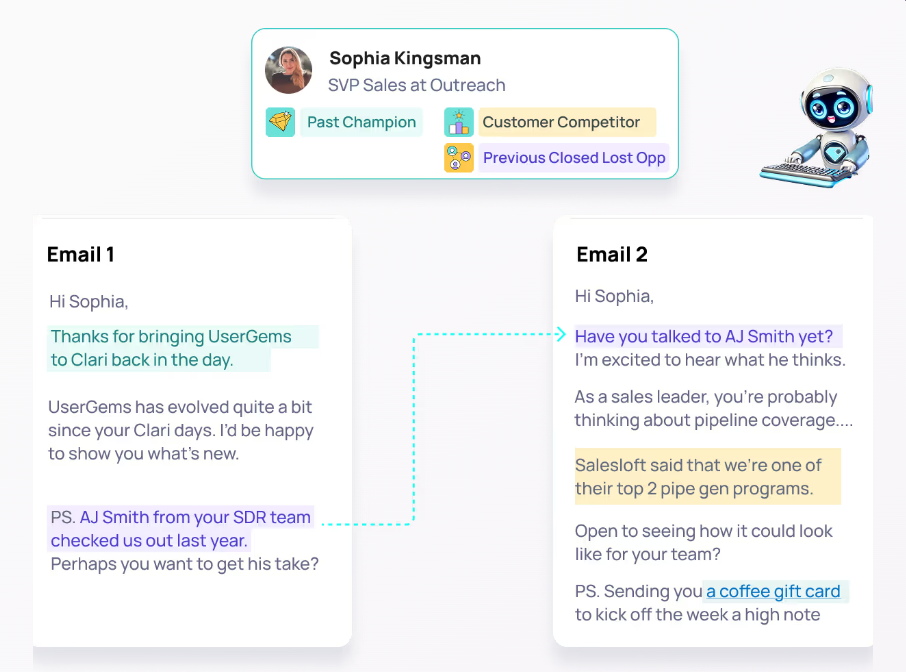

- An AI agent that handles research and messaging: Gem-E scans your CRM for past relationships and closed-lost deals, and then combines that history with current buying signals to write sequences that sound natural. Your team gets pre-written emails and call scripts that reference job changes or competitor moves with no manual research.

- Native integration that fits your existing workflow: UserGems works inside Salesforce, HubSpot, Outreach, and Salesloft, where your team already operates. No separate dashboards to check. Signals, priorities, and AI-powered messages appear directly in the tools your reps use every day.

- Human-in-the-loop flexibility: Choose between autopilot mode for lower-priority outreach and co-pilot mode, where reps review and personalize before sending. The Chrome extension shows all relevant signals when reps view an account, so they understand the context behind every recommendation.

- Proprietary data quality you can trust: UserGems maintains its own verification processes and training models with 95% match accuracy and sub-5% email bounce rates. Your sender reputation stays safe, and your team trusts the recommendations.

We're confident enough in UserGems' ability to generate pipeline that we back it with a revenue guarantee. If your investment doesn't generate pipeline equal to what you spend, we refund your money.

Book a demo to see how UserGems applies the AI adoption principles we covered, so your team can skip the trial-and-error phase.

FAQs

We want to be more strategic than just buying tools. How do we develop a formal AI strategy and measure its success?

Start by documenting where your team wastes time on manual work, where quality suffers most from human error, and where speed would improve outcomes.

Build your strategy around these priorities:

- Define success metrics before you evaluate any tools (conversion rates, time saved, pipeline generated)

- Start with one high-impact use case and prove it works before expanding

- Set clear boundaries for where AI acts independently versus where humans review

- Plan for the resources you’ll need for implementation, training, and maintenance

- Set a timeline for rollout with realistic expectations for each phase

Measure results against your original problems. If lead response time improves but conversion stays flat, you need different metrics or a different deployment.

What is AI governance, and what are the first steps to ensure we are practicing responsible AI?

AI governance creates the framework for safe AI use across your organization. It defines boundaries, approval processes, and monitoring systems that prevent problems while letting your team move fast.

You can start with these steps:

- Document which data AI can access and what stays off-limits (customer PII, proprietary information)

- Define approval processes for new AI tools before anyone on your team deploys them

- Establish review checkpoints where humans verify AI outputs before they reach customers

- Create a response plan for when AI makes mistakes or produces inappropriate outputs

Keep an eye on your AI tools as they work. Check message quality regularly, look for bias in how it scores accounts, and pay attention when customers complain. Good governance means staying involved.

Our team uses tools like ChatGPT, but how is that different from implementing more advanced AI models for sales and marketing?

ChatGPT and similar tools are general-purpose AI chatbots built for broad tasks like writing, brainstorming, and research. They work well for one-off requests but don't connect to your business data, understand your customers, or integrate with your workflows.

Business-specific AI tools are built for your exact use case. They connect to your CRM, learn from your customer data, and understand what makes your buyers convert. With some tools, you can automate entire workflows.

The difference comes down to integration and intelligence. General-purpose tools like ChatGPT help individuals work faster, while business-specific AI connects data, automates processes, and learns what works specifically for your company.